Is our integrity as a respected academic institution at risk? Holy Family is known for its excellent business, nursing, and education programs. The advent of artificial intelligence programs raises issues of academic dishonesty at our school. Should an accountant be able to finish their education by relying on AI, and who would trust them with important financial decisions if they had? Would someone want to be treated by nurses who do not know how to calculate proper dosages for medicines in the emergency room? Or taught by teachers who cannot hold themselves to the same standards they expect of their students, never showing mastery in the areas that they teach? Our school’s motto is teneor votis, “I am bound by my responsibilities,” and our very responsibility in academics is in question with the rise, and accessibility, of AI tools.

In recent years there has been an increasing number of uses for artificial intelligence. Every time I turn my head, there are new advances in this sector of technology, and more ways for it to affect education. OpenAI, a big name in the AI arena, recently unveiled a “cheat detector” for use by educators. OpenAI’s platform, ChatGPT, has become the de facto leader in this zeitgeist. Outside of the realm of education, the fear is mounting that AI will take jobs from humans in an already-ailing job market, where the once-marketable skills of the past are usurped by the technological advancements of the future. This fear has only been exacerbated by AI seeping into every facet of life. AI has not allowed humans to quit their jobs and do what they love all the time, as a utopian idealist might envision, but has instead done the opposite: AI is creating art, replacing jobs that humans need, and causing a stir in academia.

In higher academia, AI is a new way of cheating that is particularly potent and widespread. What once required a site where an educator looking for some extra cash on the side was contracted to write papers for struggling students is now as simple as typing a prompt into a search box and copy-pasting the results into Word.

As a member of Tiger Tutoring, I’ve witnessed how students are using, and in some cases abusing, AI features to do their work. During a staff meeting, HFU trained us tutors to use AI to help in our sessions, but this message has not yet reached students.

There are known use cases for AI that are appropriate. Generative AI can help brainstorm ideas, not much different than performing a Google Search to see what information is out there about a particular topic. Holy Family is hoping to capitalize on the proper uses of this technology and implement it into more courses in order to stay relevant in a rapidly changing technological landscape.

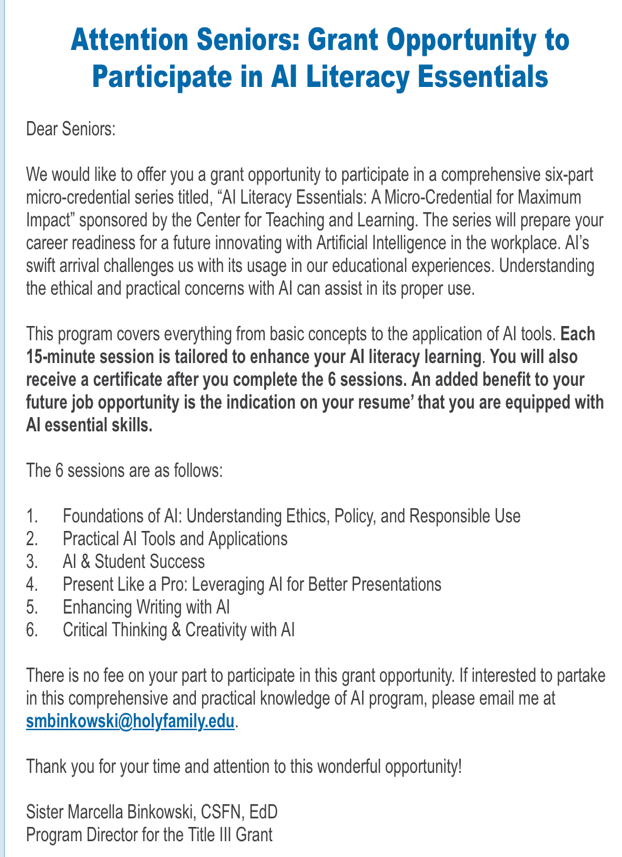

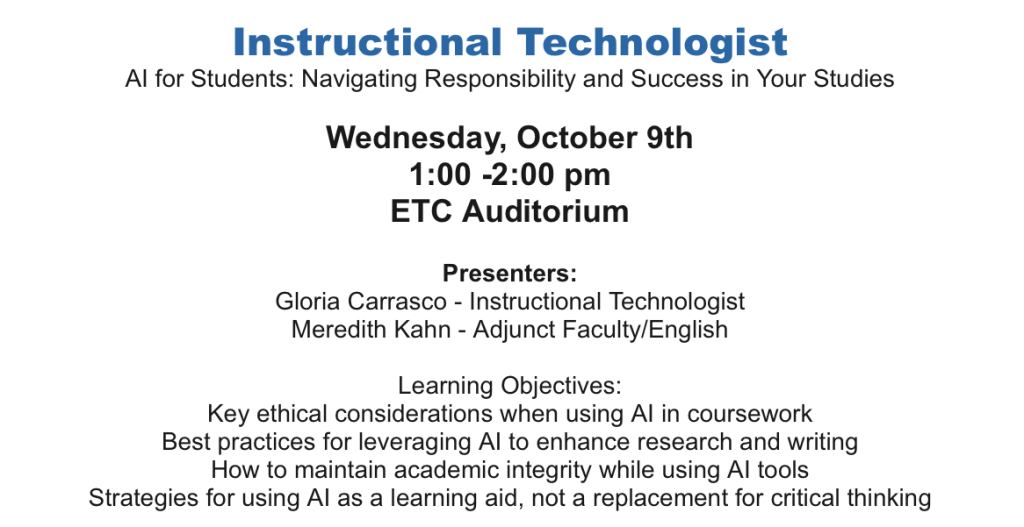

Holy Family is offering students a free Perplexity Pro plan if enough students sign up with their school email in the coming weeks. Perplexity is an education-oriented program akin to ChatGPT and Microsoft Copilot. It can be used for ethical and unethical purposes, such as brainstorming for a paper outline or, as a peer tested, writing a whole paper based on a prompt, all within a few seconds. Additionally, Holy Family has made more attempts to get ahead of this rising AI tide, arguably too late, by organizing a free seminar to teach students ethical, real-world usage of AI. As its poster (shown later in this article, along with more information on the event) states, the event organizers also want to gain information about student usage of AI thus far; this data will surely be interesting to me, and it will provide a jumping-off point for future writing on student perspectives on AI. There are also grant opportunities (shown above) for students interested in learning AI usage principles and literacy. This new approach can be viewed as Holy Family staying hip to new trends and attempting to stay marketable in a changing higher education market. It signals a shift from school administration simply attempting to curb usage of AI, which is nigh impossible, to attempting to direct and control its usage, as well as inform students of ethical usage standards.

To really learn about how AI is affecting the HFU community, I needed to see what those on the ground thought of such advancements. To gauge the sentiment about AI at Holy Family, I interviewed several individuals, including professors, to gain their insights into how they were dealing with AI usage in their subject area and courses.

I chose to focus on interviewing business and English professors. Business professors are embracing AI usage, viewing it as the future of the field, whereas English professors, whose subject has been particularly hard-hit by AI-generated papers, are reluctant to use it at all. Despite the crucial literacy skills required of all students, something AI cannot substitute for, this technology is being readily used in both subjects.

I conversed through email with Dr. Luane Amato, a professor in the School of Business, about her experiences with AI. Dr. Amato, along with the Associate Dean of the School of Business, Chris Schoettle, has authored two textbook chapters on AI, “Best Practices in AI for Higher Education” and “A Research Study in AI.” Unsurprisingly, Dr. Amato and her Business School colleagues recognize the importance of AI in the field, and are readily adapting to its use to best prepare their students for future careers. Dr. Amato explains the significance of this preparation, writing, “future employers will expect their new hires to have knowledge and expertise in its use to better support the organization.” This future is coming faster than would have been expected, with advances in technology increasing exponentially.

Though Dr. Amato feels that AI is “an extremely useful tool,” she also cautioned that students using it “must have knowledge of the challenges, pitfalls, and an awareness of the different AI resources that are recommended to use.” These challenges and pitfalls include inaccurate information, as well as finding the proper ways to include AI in work while retaining a sense of the human aspect inherent in any work. Dr. Amato discussed how she responded to students using AI unethically, including simply copy-pasting generated text, and her effective strategies for correcting this behavior: she provided education on the proper use of AI along with education on academic integrity and plagiarism. Some of the ways in which the School of Business is responding to such improper uses of AI include:

- Revised assignments to include components that would not be easy to answer using AI – that includes presentation assignments, assignments that require a personal assessment or emotional response.

- Conducted a research study on how students use AI and their understanding of ethics.

- Revised course assignments to include ethics education and an ethical assessment.

This deeper critical thinking and self-reflection on the part of students about AI usage is key. Without proper education about this new form of technology, they may not realize that what they are doing is wrong and is still considered plagiarism and academic dishonesty. Just as we all learn how to cite information for research and analysis, so must AI research and citation skills be taught.

Dr. Amato has an overall positive view of AI, despite some shortcomings, stating, “I have embraced AI and its usefulness. It is important for higher education educators to become aware and educated, and revamp their course approach, navigation, grading, and assessments to include AI education and assignments that are more creative.” Furthermore, she feels that education as a whole will get past this rocky period of initial difficulties posed by this new technology, stating, “Education has no choice but to adapt.” Dr. Amato is including several aspects of AI in her courses, including:

- Ethics education module and assessment of students’ perceptions and understanding of the ethical usage of AI.

- Giving assignments that directly use AI.

- Increasing knowledge and usage of AI resources and tools available.

- Revision of assignments to avoid direct misuse of AI resources.

I would like to thank Dr. Amato for taking the time to provide thoughtful answers about the impact of AI on business education at our school and the ways in which the School of Business is adapting.

Next, I sat down with Dr. McClain, an English professor, who graciously illuminated some of the changing dynamics of AI usage on campus. Dr. McClain uses these tools to generate ideas for her class, displaying them to students and modeling how to use AI to explain topics, with caution about potentially inaccurate information, and as a brainstorming and outlining tool to get started on a paper or project. Dr. McClain has had issues with students misusing AI, especially when these technologies gained mainstream, widespread use in the past few years; students turned in papers that were blatantly AI-generated and, when privately confronted about the matter, admitted to it. Because using AI this way was still new at the time, Dr. McClain allowed the students to redo the papers.

Now that AI has been around for years and the syllabi for her courses have clear guidelines on AI usage and originality of submitted work, she does not give this leeway. When I questioned her about AI checkers and their usefulness, Dr. McClain stated she could not simply rely on the AI checker developed by ChatGPT’s owner, OpenAI, as its result would not be enough proof in and of itself to file an academic dispute against the student; she would need more concrete evidence, like an admission from the student. This raises the possibility, however small, of false positives from this system, which could spell educational ruin for an otherwise honest student.

I would like to thank Dr. McClain for providing pertinent information about the positives of AI for use in this article.

I gained an anonymous source in the form of an English professor who was much less open to the idea of AI than Dr. McClain, and who took a much harsher stance on its use. This professor stated that they too receive submissions that seem AI-generated because they are vague, neglect requirements from the rubric, or provide too much information about a simple topic. In this case, this professor runs the paper through several AI checkers and, when all of them agree that AI was used, emails the student with these results. Sometimes, the use of an app like Grammarly, a proofreading and grammar-checking app that can be built into browsers, can be to blame. Grammarly has implemented AI features that can reword sections of a paper, taking away the original voice of the author, in this case the student. This professor suggests not using this app, or at most using it for correcting small grammatical errors, like punctuation or spelling.

AI, as this professor notes, can increase the “push to make employees more productive, to get more production from the same employee.” Professors are being pushed to use AI so that their “teaching is now more efficient [easier]… therefore lower their cost of delivering courses to their clients (students)” by having professors teach more courses for the same pay. This makes sense in purely economic terms, but the implication is that the use of this technology is not concerned with the best academic outcomes for students.

A great question raised by this professor concerns expertise within the field of education. We, as students, trust our professors because they have invested thousands of hours into rigorous education, earned degrees in their subject matter, and are always willing to learn and grow as educators. However, AI, in the words of this professor, may spell “the death of expertise,” raising the question: “Why do we need experts when we have AI?” The very human connection we have with our professors is important, invaluable even. Though we could learn the same information from a research journal, a webinar, a documentary, or, yes, AI, having in-depth discussions with professors that we learn and grow from is not so easily replaced, no matter what administration might push for economic reasons. This raises even more questions about the for-profit nature of higher education throughout this country, but this is a topic for another day.

AI, as this anonymous professor noted, takes away a key human aspect of academia, something that we have surely experienced in online or asynchronous classes. Education is just as much about learning material as it is about getting in front of people and talking. This anonymous professor worries that the gap between “students who have superior skills and those that need to further develop skills in written and oral [yes, in person] communication, critical thinking, problem solving, textual analysis, digital literacy, and creativity” will only widen, as those that need to hone their skills will be stifled by the crutch of AI.

This professor has also changed their course syllabi, assignments, and rubrics to make it harder to use AI, including more personal and emotional ties, similar to the changes described by Dr. Amato; these are things that AI cannot replicate, as it takes what some refer to as a middle-ground approach to contentious topics, trying to argue both sides, when most individuals would gravitate towards one or the other. However, though they view AI in a mostly negative light, they did say that they have used AI to help develop quizzes for their classes. The manual aspect comes in when they read the text and make notes of what is most important to quiz on, review the questions that AI generates, and take out questions that do not fit the topic, to make sure that AI achieves this.

I would like to thank this anonymous professor for sharing their valuable insights on the downsides of AI on campus.

Even the most ardently opposed to AI are being pushed into using and adapting to it.

As we read from Dr. Amato, the business department appears on the cutting edge of using AI effectively in education, but this can be an issue when other departments, like English, are attempting to teach their students to use this burgeoning technology as little as possible, and instead learn to write using their own unique voice and thoughts. Business is a field that is already embracing this tool for data analysis and more, whereas English, itself an art form, is less open to these advancements, just as artists like painters and drawers scoff at AI-generated art. Different fields require different applications and nuanced uses of this new technology. It is clear that AI is not simply going away, so taking a middle-ground approach that teaches how to appropriately use it within each discipline is important, recognizing the strengths and weaknesses of it as a tool for use in different fields.

In regard to student use of AI on campus, as a peer tutor for writing at Tiger Tutoring, I’ve witnessed firsthand unethical usage of AI, especially by English Language Learners. I think that this is particularly alarming because it shows the lack of support for these students, which demonstrates a need outside of the tutoring department and instead in the realm of ESL specialists. These students, from my experience, do not know that what they are doing is wrong. I have seen Grammarly extensions being used to heavily edit papers, taking whole sentences and paragraphs and completely rewording them, which loses the original message, and voice, of the writer. The extension takes text and rewords it to make it clearer, use proper grammar, and improve word choice, but in doing so it eliminates the writer’s style and unique voice.

AI is not the root problem in this scenario, but instead a band-aid for a much deeper wound, one that requires systemic changes beyond educating students about what not to do: proactive measures by administration and faculty to help these students so that they do not feel that they must use AI to succeed. Relying on AI will only hurt these students in the long term, when they cannot simply turn to AI behind their desk, but must think on the fly, talk in front of others, and brainstorm and write without the use of technology. These students clearly need more support to write in a language they are still learning, but simply copy-pasting into AI is not the tool for that purpose. What I’ve just described encapsulates a large part of the issue with AI usage: it does not teach the skills, the critical thinking and problem-solving techniques, that are necessary for success in careers. Instead, it is a shortcut that can too easily become the only path forward for those who do not have the proper support, the foundational skills, for success in university and beyond.

The fact that students are abusing AI does not mean that every use case is inherently bad. In fact, there can be many ways of using this technology as another reference, another resource, with the proper amount of care and discretion, much of which must be explicitly taught and workshopped. It will likely take education time to catch up, as it did with other forms of technology that are now well-integrated into almost every classroom nationwide. AI will also have to change, adapting its uses for education, working alongside schools, and teaching educators and administrators how to use it effectively.

As an alternative to resorting to AI to finish, or fully draft, a paper for you, or to help with any assignment, test prep, and more, visit the many skilled peer and professional tutors at Tiger Tutoring. This will ensure that you keep your academic integrity, uphold the school’s motto, and learn the proper way of doing things, so that in the future you will be able to do it on your own. Lastly, reach out to professors; they are there to help, especially during office hours, in virtual meetings, and after class. There is also PearDeck 24/7 online tutoring through each course’s Canvas shell. Check the left-hand sidebar, where you find such sections as modules, discussions, and assignments; there should be a link to “TutorMe (Online Tutoring).” You can also ask the IT Help Desk or Tiger Tutoring for support on receiving online tutoring.

The generation of current high school and college students is much more tech savvy than our predecessors, so in our careers we will find ways to maximize productivity and work smarter using technologies such as AI; however, we should not lose the human element of our jobs, be that in nursing, education, business, or STEM. These tools should assist us, not replace us. We should not allow ourselves to be hindered in connecting and communicating with one another in the ways that humans have for millennia.

This is not to say that we should abandon AI completely. The same arguments made against AI could be made about typing out papers instead of writing them by hand, teaching touch typing over cursive penmanship, using e-books instead of paperbacks, using an online archive database instead of a physical library, or using Google instead of an encyclopedia, dictionary, or thesaurus when writing and researching.

I have used AI to brainstorm ideas for catchy article titles and to explain some complex science topics in my free time. In my math tutoring, I have used it to help explain theorems and the steps in a problem, and to check answers and work backwards from there. These are uses that do not detract from one’s own work, but simply add to it and enhance it, providing another tool. I believe that we should be preparing students to confront this new technology appropriately and with caution about the power that it can hold. We can also hold one another accountable by helping each other; if you see someone in your class resorting to AI or unsure of how to progress on an assignment, help them out, or direct them towards the professor or Tiger Tutoring. Tutors are trained in how to effectively teach study skills, outline, draft, and revise papers, and use AI in ethical ways.

There is no clear-cut answer to the question I posed at the beginning of this article. In fact, one could say that our integrity has been at risk this whole time, from various sources, be it those being paid to write papers for others, cheating on online exams, or plagiarism. All are academically dishonest, and students are taught to avoid them, but they still occur. AI is just another challenge that education must, and will, overcome and adapt to. However, I do believe that there will be steps forward, and backward, over time. This will not be a clear path to progress, but one fraught with difficulties that have us question what education means and what it looks like in an ever-evolving, globalized world. But this will not spell the ruin of our school; far from it. Just as the car revolutionized personal transportation and changed the landscape of our country, or the personal computer and World Wide Web revolutionized information-sharing and global connectedness, AI will mark a new passage in the ongoing technological revolution, one that will be looked back on as a messy time, but one that, when the smoke clears, led to gains.

We have indeed opened Pandora’s box of artificial intelligence; there is no going back to the “before times.” But that does not mean all is lost. We still have the opportunity to use AI as another tool, just as we use Google, Canvas, and Wikipedia. There will be more challenges in ethically using AI, and pressing concerns outside of the realm of academia, such as deepfakes, but as always, humans will adapt. As a future educator myself, I strive to learn how to use AI ethically and efficiently so I can set a good example for my students, all the while staying up-to-date on this technology.

Zeb is a Junior Secondary Education English major. He graduated from Bucks County Community College with an associate’s degree in Secondary Education History. In his free time, he enjoys listening to and making music, hanging out with his four cats, spending time in nature, and weightlifting.

Feature photo courtesy of jalees
